Artificial Intelligence and its branches, such as machine learning, are quickly gaining popularity with users and developers alike. While there is no single recipe for creating the next great app that everyone will use, I can guarantee that one of the ingredients is the thoughtful use of AI.

But creating your own AI solution can be complex and tricky. The good news is that there are already some very powerful and easy-to-use solutions provided by companies such as Google, Amazon, and Microsoft. These companies offer services and libraries with popular features such as face recognition, OCR (Optical Character Recognition), classification models, and so on. (References and links can be found at the end of this post.)

One solution that we had the chance to use here at Avenue Code is the Google Mobile Vision API, which is part of Google Play services on the Android platform. Some features of this library are not exclusive to Android. For instance, iOS developers can also use the Face Recognition API - check out the documentation! This post is dedicated to how we can use Face Recognition in our app with the Google Mobile Vision API.

Creating the next MSQRD or Snapchat is not rocket science with the Mobile Vision API. To quote the API description: “It's designed to better detect human faces in images and video for easier editing. It's smart enough to detect faces even at different orientations -- so if your subject's head is turned sideways, it can detect it. Specific landmarks can also be detected on faces, such as the eyes, the nose, and the edges of the lips.”

So let’s get started! First of all, create a project in Android Studio. To use the Google Mobile Vision API, declare the following dependency in your app-level build.gradle file. At the time of this post, the current version of play-services-vision is 10.2.4. You can check out the documentation here: https://developers.google.com/android/guides/setup

compile 'com.google.android.gms:play-services-vision:10.2.4'

Your Android Manifest file should enable the service, so that the face detection dependency can be downloaded when the app is installed. Place the code below inside the application tag.

<meta-data android:name="com.google.android.gms.vision.DEPENDENCIES" android:value="face"/>

Our app is based on the Google sample code for the Camera2 API. To focus on how we can use the Face Recognition API, we will abstract the camera layer and go directly to the heart of what this post is all about. If you want more details on how the Camera2 API works, please take a look at the GitHub repository:

https://github.com/googlesamples/android-Camera2Basic

The architecture of our application consists of two packages: camera and face_recognition. The camera package is based entirely on the Camera2Basic repository; the only extra method is startFaceRecognition in FragmentCameraPreview:

private void startFaceRecognition() {
    Bundle bundle = new Bundle();
    bundle.putSerializable("image", mSavedFile);
    FaceRecognitionFragment frResultFragment = new FaceRecognitionFragment();
    frResultFragment.setArguments(bundle);
    getFragmentManager().beginTransaction()
            .replace(R.id.container, frResultFragment)
            .commit();
}

This method only passes along the photo that the user has taken. The result of the recognition lives in FaceRecognitionFragment. Before we can actually call the API, we need to prepare our ImageView to display the processed result. We draw directly on the image itself, so we need a Bitmap, a Paint, and a Canvas for this to work:

BitmapFactory.Options options = new BitmapFactory.Options();
options.inMutable = true; // a Canvas can only draw on a mutable bitmap
Bitmap imageBitmap = BitmapFactory.decodeFile(file.getAbsolutePath(), options);

We need to make the Bitmap mutable so we can write on it later - otherwise we will end up with an exception like this: java.lang.IllegalStateException: Immutable bitmap passed to Canvas constructor

Then set up the Paint for the little red circles that mark parts of the face:

Paint rectPaint = new Paint();
rectPaint.setStrokeWidth(5);
rectPaint.setColor(Color.RED);
rectPaint.setStyle(Paint.Style.STROKE);

And finally, create the canvas where these red circles will be drawn:

Canvas canvas = new Canvas(imageBitmap);
canvas.drawBitmap(imageBitmap, 0, 0, null);

Face Recognition Coding

Up to this point, nothing magical is happening. We're only warming up the stage for the big player: FaceDetector.

Beware when importing this class: there are two FaceDetectors, one from android.media, which we are NOT interested in, and one from the com.google.android.gms.vision.face package. The latter is our guy.

FaceDetector faceDetector = new FaceDetector.Builder(getActivity())
        .setTrackingEnabled(false)
        .setLandmarkType(FaceDetector.ALL_LANDMARKS)
        .build();

First, we set setTrackingEnabled to false to get better performance and accuracy when processing a single image. We would enable this property for live video, for example.

Next, we need to check whether the face detector is operational:

if (!faceDetector.isOperational()) {
    new AlertDialog.Builder(getActivity())
            .setMessage("Could not set up the face detector!")
            .show();
    return;
}

Sometimes it takes a little while to download this dependency, and isOperational() returns false until the download has finished.
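Because the download can take a while, one option is to re-check the detector a few times before giving up. The helper below is only a sketch: ReadinessPoller and waitUntilReady are hypothetical names, not part of the Mobile Vision API, and java.util.function.BooleanSupplier assumes Java 8 language support (on Android, API level 24 or later).

```java
import java.util.function.BooleanSupplier;

/** Hypothetical helper, not part of the Mobile Vision API. */
final class ReadinessPoller {
    private ReadinessPoller() {}

    /**
     * Calls isReady up to maxAttempts times, sleeping delayMillis between
     * attempts. Returns true as soon as a check succeeds, false otherwise.
     */
    static boolean waitUntilReady(BooleanSupplier isReady, int maxAttempts, long delayMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (isReady.getAsBoolean()) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(delayMillis);
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and stop waiting.
                    Thread.currentThread().interrupt();
                    return false;
                }
            }
        }
        return false;
    }
}
```

In the app this could be called as ReadinessPoller.waitUntilReady(faceDetector::isOperational, 5, 1000). Since it sleeps between attempts, it should run on a background thread rather than the UI thread.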

Now let’s detect faces! Create a Frame from the Bitmap, then pass this frame to the detect method of the FaceDetector to get back a SparseArray of Face objects.
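As a minimal sketch of that step, assuming the mutable bitmap is the imageBitmap used for the canvas and that the result is stored in a variable named faces (the name the loop further down relies on):

```java
// Sketch only: needs the Android SDK and the play-services-vision dependency.
// Uses android.util.SparseArray, com.google.android.gms.vision.Frame
// and com.google.android.gms.vision.face.Face.

// Wrap the bitmap in a Frame, the input type the detector works on.
Frame frame = new Frame.Builder().setBitmap(imageBitmap).build();

// Run detection; each entry in the SparseArray is one detected face.
SparseArray<Face> faces = faceDetector.detect(frame);

// Release the detector's resources once detection is done.
faceDetector.release();
```

If no face is found, faces.size() is 0 and the drawing loop simply does nothing.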

Little Challenge

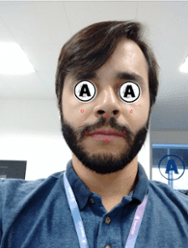

To make this app more fun, let’s detect both eyes and replace them with the Avenue Code big ‘A’ icon.

For this, create a Bitmap that references this resource:

Bitmap acBitmap = BitmapFactory.decodeResource(getResources(), R.drawable.ac);

It’s very easy to retrieve the coordinates of the right and left eyes. Just loop through the SparseArray and get the landmarks of each face like this:

for (int i = 0; i < faces.size(); i++) {
    Face face = faces.valueAt(i);
    for (Landmark landmark : face.getLandmarks()) {
        int cx = (int) (landmark.getPosition().x);
        int cy = (int) (landmark.getPosition().y);
        float radius = 10.0f;
        if (landmark.getType() == Landmark.LEFT_EYE
                || landmark.getType() == Landmark.RIGHT_EYE) {
            // Draw the icon over the eye, shifted 100 px up and to the left.
            canvas.drawBitmap(acBitmap, cx - 100, cy - 100, null);
        } else {
            // Mark every other landmark with a small red circle.
            canvas.drawCircle(cx, cy, radius, rectPaint);
        }
    }
}
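The fixed offset of 100 pixels in the loop above only centers the icon on the eye if the icon happens to be about 200 pixels wide and tall. A small helper can derive the offset from the icon's actual size instead. This is a sketch, and IconPlacement and topLeftFor are hypothetical names introduced here for illustration:

```java
/** Hypothetical helper, not part of the Mobile Vision API. */
final class IconPlacement {
    private IconPlacement() {}

    /**
     * Returns {left, top}: the position at which an icon of the given size
     * must be drawn so that its center sits on the point (cx, cy).
     */
    static int[] topLeftFor(int cx, int cy, int iconWidth, int iconHeight) {
        return new int[] { cx - iconWidth / 2, cy - iconHeight / 2 };
    }
}
```

Inside the loop, the result could be used as int[] pos = IconPlacement.topLeftFor(cx, cy, acBitmap.getWidth(), acBitmap.getHeight()); followed by canvas.drawBitmap(acBitmap, pos[0], pos[1], null);. This keeps the icon centered even if the decoded bitmap ends up a different size than expected.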

And finally just set the result Bitmap:

mImageView.setImageDrawable(new BitmapDrawable(getResources(), imageBitmap));

Conclusion

That’s it. Easy and painless. With just a few lines of code, we have applied AI in our app. You can check out this project on GitHub: https://github.com/heitornascimento/mobile_vision_face_recognition

This is a tiny little glimpse into what you can do with the Google Mobile Vision API - there's plenty more to learn and play with! I hope this was fun and enjoyable. Questions? Feedback? Leave them in the comments!

References:

Google Mobile Vision API: https://developers.google.com/vision/

Google AI Cloud Solution: https://cloud.google.com/products/machine-learning/

Amazon: https://aws.amazon.com/machine-learning/

Microsoft: https://azure.microsoft.com/en-us/services/machine-learning/

Camera2 Sample Repository: https://github.com/googlesamples/android-Camera2Basic

Google Code Lab: https://codelabs.developers.google.com/codelabs/face-detection/

Author

Heitor Souza

Heitor Souza is an Android Engineer with experience in Java, Ruby on Rails, and the Salesforce platform. He's also a bassist in his spare time.