Containers, iterables, iterators, and generators are core concepts in Python that can help you write cleaner solutions and use fewer of your computer's resources, such as memory. Each of them has a corresponding abstract base class in the collections.abc module, and to make our own classes behave like one of them, we need to implement a few special methods.

| Abstract Base Class | Inherits From | Abstract Methods | Mixin Methods |
|---|---|---|---|
| Container | | __contains__ | |
| Iterable | | __iter__ | |
| Iterator | Iterable | __next__ | __iter__ |
| Generator | Iterator | send, throw | close, __iter__, __next__ |

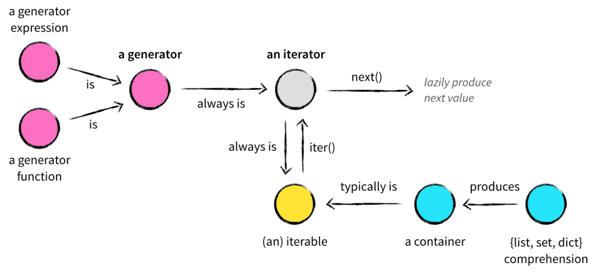

Containers normally have a way to iterate over the objects that they contain, and a generator is always an iterator. So all the relationships between these concepts can be confusing. Since it might not be clear what each one does, I've included the image below to explain the relationships between them more precisely.

Image courtesy of nvie.
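One way to check these relationships yourself is to test a few objects against the classes in collections.abc. The snippet below is only a small sketch; the variable names are mine and not part of the original examples.

```python
from collections.abc import Container, Generator, Iterable, Iterator

numbers = [1, 2, 3]
numbers_iter = iter(numbers)
squares = (n * n for n in numbers)

# A list is a Container and an Iterable, but not an Iterator.
list_checks = (
    isinstance(numbers, Container),
    isinstance(numbers, Iterable),
    isinstance(numbers, Iterator),
)
print(list_checks)  # (True, True, False)

# A list iterator is an Iterator (and so an Iterable), but not a Generator.
iter_checks = (
    isinstance(numbers_iter, Iterator),
    isinstance(numbers_iter, Iterable),
    isinstance(numbers_iter, Generator),
)
print(iter_checks)  # (True, True, False)

# A generator object is a Generator, an Iterator, and an Iterable.
gen_checks = (
    isinstance(squares, Generator),
    isinstance(squares, Iterator),
    isinstance(squares, Iterable),
)
print(gen_checks)  # (True, True, True)
```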

Before we start talking about all these concepts, let me explain a little bit about dunder methods.

Dunder Methods

Dunder methods, or magic methods, are special methods in Python that you can use to enrich your classes. The term "dunder" stands for “double under” and is used because these special methods have a double underscore in their prefix and suffix.

This language feature lets you define how your classes behave with Python's built-in operations. A very commonly used dunder method is __init__, which plays the role of a constructor in other languages. It's called after the instance has been created but before it is returned to the caller, which makes it the place to set up the instance's initial state.
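To make this concrete, here is a minimal sketch of a class that defines three dunder methods. The Playlist class and its attributes are hypothetical and exist only for illustration.

```python
class Playlist:
    """Hypothetical class used only to illustrate dunder methods."""

    def __init__(self, name, songs):
        # Runs right after the instance is created, before the caller gets it.
        self.name = name
        self.songs = list(songs)

    def __len__(self):
        # Called by len(playlist).
        return len(self.songs)

    def __repr__(self):
        # Called by repr(playlist) and by the interactive prompt.
        return f"Playlist({self.name!r}, {self.songs!r})"


playlist = Playlist("road trip", ["Song A", "Song B"])
print(len(playlist))   # 2
print(repr(playlist))  # Playlist('road trip', ['Song A', 'Song B'])
```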

Containers

Containers are data structures that hold other objects and support membership tests using the in operator via the __contains__ magic method. Tuple, list, set, and dict are examples of containers. Usually, containers provide a way to access the contained objects and to iterate over them.

An interesting container example is str in Python. We use this all the time and maybe don’t even notice that it's also a container.

>>> foobar = 'foobar'

>>> 'foo' in foobar

True

>>> 'bar' in foobar

True

>>> 'potato' not in foobar

True

As you can see, a string is a classic example of a container, since it contains all of its substrings, and this feature is used all the time.
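A class of your own becomes a container as soon as it defines __contains__. The sketch below uses a hypothetical EvenNumbers class that answers membership tests without storing any elements at all.

```python
from collections.abc import Container


class EvenNumbers:
    """Hypothetical container: 'holds' every even integer without storing any."""

    def __contains__(self, value):
        # Called by the `in` and `not in` operators.
        return isinstance(value, int) and value % 2 == 0


evens = EvenNumbers()
print(4 in evens)                    # True
print(7 not in evens)                # True
print(isinstance(evens, Container))  # True, because __contains__ is defined
```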

Iterables and Iterators

An iterable is any object that can return an iterator, and an iterator is the object that actually produces the values of an iterable, one at a time. An iterator works like a lazy factory that is idle until you ask it for a value. Note that every iterator is also an iterable, but not every iterable is an iterator. Confused? Let's look at some examples.

>>> numbers = [1, 2, 3, 4]

>>> type(numbers)

<class 'list'>

>>> it = iter(numbers)

>>> type(it)

<class 'list_iterator'>

>>> it.__next__() # same as next(it)

1

>>> next(it)

2

List is a great example for explaining the difference between an iterable and an iterator, because list is an iterable but not an iterator. You can see this because when we call it = iter(numbers), it returns a different class called list_iterator, which is the class responsible for iterating over all elements in this list.

To make this possible, the object's class needs to implement the __iter__ method, which returns an iterator, that is, an object that defines the method __next__. Iterators return themselves from __iter__, but as we've seen in the example above, an iterable such as list can delegate the iteration to a different class.
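The split between the two roles can be sketched with a pair of small classes. Countdown and CountdownIterator are hypothetical names chosen for this illustration.

```python
class Countdown:
    """Iterable: hands out a fresh iterator on every iter() call."""

    def __init__(self, start):
        self.start = start

    def __iter__(self):
        return CountdownIterator(self.start)


class CountdownIterator:
    """Iterator: keeps the current position and returns itself from __iter__."""

    def __init__(self, current):
        self.current = current

    def __iter__(self):
        return self

    def __next__(self):
        if self.current <= 0:
            raise StopIteration
        value = self.current
        self.current -= 1
        return value


countdown = Countdown(3)
print(list(countdown))  # [3, 2, 1]
print(list(countdown))  # [3, 2, 1] again, since each pass gets a new iterator

it = iter(countdown)
print(iter(it) is it)   # True: an iterator is its own iterator
```

Because the position lives in the iterator rather than in the iterable, the same Countdown object can be looped over as many times as you like.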

Behind the scenes, the for statement calls iter() on the object, which returns an iterator. The loop then calls __next__ on that iterator to fetch one element at a time. When there are no more elements, __next__ raises a StopIteration exception, which tells the for loop to terminate.
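Spelled out by hand, the steps a for loop performs look roughly like this. The manual_for helper is a hypothetical function written for illustration; it approximates the loop's behavior rather than reproducing the interpreter's implementation.

```python
def manual_for(iterable):
    """Collect items the way a for loop would, with each step written out."""
    collected = []
    iterator = iter(iterable)        # what `for` does first
    while True:
        try:
            item = next(iterator)    # fetch one element per step
        except StopIteration:
            break                    # the loop ends quietly
        collected.append(item)       # stands in for the loop body
    return collected


print(manual_for("abc"))  # ['a', 'b', 'c']
```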

Generator

Generators are a simpler and more elegant way to write iterators; they work the same way but are written as functions. When you call a generator function, it doesn't run its body and return a single value; instead, it returns a generator object that supports the iterator protocol. So, it can be thought of as a resumable function.

Anything that can be done with generators can also be done with class-based iterators.

The keyword yield is responsible for this behavior. When the generator's __next__() method is called, the function runs until it reaches a yield, which hands a value back to the caller, just like a return statement.

The difference is that yield suspends execution instead of ending it. By suspend, we mean that the local state is retained, including local variables, instruction pointer, internal evaluation stack, and the state of any exception handling.
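A small experiment makes the suspension visible. In this sketch, two_steps is a hypothetical generator function that records in a list how far its body has run.

```python
def two_steps(log):
    log.append("started")
    total = 10               # local state that must survive the pause
    yield total
    log.append("resumed")
    total += 5
    yield total


log = []
gen = two_steps(log)
print(log)        # [] because calling the function ran none of its body
print(next(gen))  # 10
print(log)        # ['started']
print(next(gen))  # 15, so `total` was remembered across the pause
print(log)        # ['started', 'resumed']
```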

>>> def fib():

...     prev, curr = 0, 1

...     while True:

...         yield curr

...         prev, curr = curr, prev + curr

>>> f = fib()

>>> f

<generator object fib at 0x108433c50>

>>> from itertools import islice

>>> list(islice(f, 0, 3))

[1, 1, 2]

>>> f.__next__()

3

>>> next(f)

5

On the next call, the generator resumes right after the yield that returned the previous value. This makes the function easier to write and much clearer than an approach using instance variables.
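For comparison, here is roughly what the instance-variable approach looks like. The Fib class below is my own sketch of a class-based counterpart to the fib() generator, not code from the original example.

```python
from itertools import islice


class Fib:
    """Class-based counterpart of the fib() generator, for comparison."""

    def __init__(self):
        # State the generator kept in local variables now lives on the instance.
        self.prev, self.curr = 0, 1

    def __iter__(self):
        return self

    def __next__(self):
        value = self.curr
        self.prev, self.curr = self.curr, self.prev + self.curr
        return value


first_seven = list(islice(Fib(), 7))
print(first_seven)  # [1, 1, 2, 3, 5, 8, 13]
```

The class produces the same sequence, but the bookkeeping that yield handled for free now has to be written by hand.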

The Fibonacci generator above produces an infinite sequence of numbers, but what if we would like to keep it finite? How can we do that? You may think: "Let's just raise a StopIteration exception." Unfortunately, this won't work. Since Python 3.7, a StopIteration raised inside a generator body is converted into a RuntimeError, so the for loop can't treat it as the normal end of the iteration, and the error propagates out of the loop. The correct way to do it is to use the return statement. In a generator function, the return statement indicates that the generator is done and causes StopIteration to be raised for the caller. Here's what the solution looks like:

>>> def fib(n=7):

...     prev, curr = 0, 1

...     curr_n = 0

...     while True:

...         if curr_n == n or n < 1:

...             return

...         yield curr

...         curr_n += 1

...         prev, curr = curr, prev + curr

>>> for f in fib():

...     print(f)

1

1

2

3

5

8

13
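The difference between the two ways of stopping can be seen side by side. This sketch assumes Python 3.7 or later, where a StopIteration raised inside a generator is turned into a RuntimeError; the function names are hypothetical.

```python
def stop_with_return():
    yield 1
    return               # ends the generator cleanly


def stop_with_raise():
    yield 1
    raise StopIteration  # converted to RuntimeError on Python 3.7+


clean_result = list(stop_with_return())
print(clean_result)  # [1]

error_name = None
try:
    list(stop_with_raise())
except RuntimeError as exc:
    error_name = type(exc).__name__
    print("stopped with", error_name)
```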

As you can see, generators and iterators are very similar. They work in pretty much the same way, but generators have three additional methods: send(), throw(), and close(). Let's imagine a scenario where we need to add many people to a database, and the inserts happen inside a loop because we need to process each person's information first. Generators can help in this situation.

>>> def add_person_to_database(host='localhost', port=27017):

This is a simple example of how to use the power of generators. The send() method passes a value into the generator, so a yield expression is also responsible for receiving whatever send() passes in. The throw() method raises an exception inside the generator, at the yield expression where it is paused. The close() method raises a GeneratorExit exception inside the generator (behind the scenes it works like throw()) to terminate the iteration.
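The three methods can be tried out without a real database. The sketch below is not the function from the example above: person_saver is a hypothetical generator, and a plain list stands in for the database so the snippet runs on its own.

```python
def person_saver(storage):
    """Coroutine-style generator; `storage` is a list standing in for a database."""
    saved = 0
    try:
        while True:
            try:
                person = yield saved      # receives the value passed to send()
            except ValueError:
                continue                  # throw(ValueError) lands here; skip it
            storage.append(person.title())  # "process" the record, then store it
            saved += 1
    finally:
        # Runs when close() raises GeneratorExit at the paused yield.
        storage.append("connection closed")


database = []
saver = person_saver(database)
primed = next(saver)                      # run to the first yield
after_first = saver.send("ada lovelace")
after_error = saver.throw(ValueError("bad record"))
after_second = saver.send("alan turing")
saver.close()

print(primed, after_first, after_error, after_second)  # 0 1 1 2
print(database)  # ['Ada Lovelace', 'Alan Turing', 'connection closed']
```

Note the initial next() call: a generator has to be advanced to its first yield before send() can deliver a value to it.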

List Comprehension vs Generator Expression

As we saw, a generator function uses the yield statement to deliver data on demand. But there is a simpler way to create a generator without the keyword yield, and it's called a generator expression.

>>> square_list = [x * x for x in range(6)]

>>> square_gen = (x * x for x in range(6))

>>> print(square_list)

[0, 1, 4, 9, 16, 25]

>>> print(square_gen)

<generator object <genexpr> at 0x10358abd0>

>>> next(square_gen)

0

The syntax of the two is very similar, but unlike a list comprehension, a generator expression doesn't construct a list object. Instead, it returns a generator object that we can iterate over just as we do with generator functions. The main advantage is that a generator takes much less memory, because it produces its values one at a time instead of storing them all.
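The memory difference is easy to observe with sys.getsizeof, which reports the size of the object itself (for a list, that excludes the elements it refers to). The exact numbers vary by Python version and platform, so this sketch only compares them; the variable names are mine.

```python
import sys

n = 100_000
big_list = [x * x for x in range(n)]
big_gen = (x * x for x in range(n))

list_size = sys.getsizeof(big_list)
gen_size = sys.getsizeof(big_gen)
print(list_size > gen_size)  # True: the list holds n references, the generator none

# Both produce the same values, but the generator can be consumed only once.
same_total = sum(big_gen) == sum(big_list)
leftover = list(big_gen)
print(same_total)  # True
print(leftover)    # []
```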

Conclusion

These concepts can help you write cleaner and lighter code and understand how coroutines work in Python. As an exercise, look through your own code for for loops that can be replaced by generators. This can help you gain a better understanding of the topic and also improve your code.