Last week, we discussed the core concepts of event-driven architecture (EDA). Today, I will walk through a concrete example to help you understand those concepts. For programmers and architects, the best way to learn is usually to write code, so let's get started!

Kafka Installation on macOS

First of all, we need to install Kafka. As I mentioned in part one of this series, Apache Kafka is an excellent framework for working with event streams. Since our example project was created on macOS, we can install Kafka with Homebrew in a few steps:

1. In your terminal window, run the following command:

brew install kafka

2. After the installation completes, start Kafka to confirm that it was installed successfully. Kafka needs ZooKeeper, which Homebrew installed as a dependency, and ZooKeeper must be running before Kafka starts. Run each command below in its own terminal window (on Apple Silicon Macs, Homebrew uses /opt/homebrew/etc/kafka instead of /usr/local/etc/kafka):

zookeeper-server-start /usr/local/etc/kafka/zookeeper.properties

kafka-server-start /usr/local/etc/kafka/server.properties

3. If everything went well, Kafka starts without any errors in its log and stays attached to your terminal window.

Now that Kafka is running, we need to create our topic before writing any code. The topic is where producers write events and where consumers read them. In another terminal window, run the following command:

kafka-topics --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic EMAIL_TOPIC

EDA as Java Code

With Kafka up and running in our development environment, it's time to open our preferred IDE and write some code. The example below represents a state change in a service that persists user information for a fictional company. When a user changes any of their information, the service sends an event to a Kafka topic called EMAIL_TOPIC. Another service, which handles emails and their templates, reads this event and sends the corresponding email to the user.

Project Dependencies

This project needs a few dependencies declared in your pom.xml (Maven) or build.gradle (Gradle) file. This example uses Maven, with the dependencies below.

Coding Your Producer

Since this project is just an example, without the many business rules you might encounter in a real-life project, we will simulate how a producer (for instance, a producer microservice) writes an event to EMAIL_TOPIC when it updates a single user. To support this, the project has a User class with an address attribute.

When the address changes, UserService processes the request through its updateUser method. updateUser updates only the user's address and sends an event to EMAIL_TOPIC announcing that something happened in the system, which in this case is CHANGE_ADDRESS_CONFIRMATION. The system then uses the email address contained in the event to send the confirmation email.
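The producer-side model described above can be sketched in plain Java. This is a minimal sketch, not the project's actual source: the EventPublisher interface, the two-field User class, and the "<EVENT_TYPE>;<email>" payload format are assumptions made for illustration. The interface stands in for the messageQueue class, so UserService can be exercised without a running broker.

```java
import java.util.Objects;

// Hypothetical publishing seam; in the article's project, the messageQueue class plays this role.
interface EventPublisher {
    void publish(String topic, String key, String value);
}

// Minimal user model with only the fields this example needs (assumed).
class User {
    private final String email;
    private String address;

    User(String email, String address) {
        this.email = Objects.requireNonNull(email, "email");
        this.address = address;
    }

    String getEmail() { return email; }

    String getAddress() { return address; }

    void setAddress(String address) { this.address = address; }
}

class UserService {
    static final String EMAIL_TOPIC = "EMAIL_TOPIC";
    static final String CHANGE_ADDRESS_CONFIRMATION = "CHANGE_ADDRESS_CONFIRMATION";

    private final EventPublisher publisher;

    UserService(EventPublisher publisher) {
        this.publisher = Objects.requireNonNull(publisher, "publisher");
    }

    // Changes only the address, then announces the state change on EMAIL_TOPIC.
    // Assumed payload format: "<EVENT_TYPE>;<email>", keyed by the user's email.
    void updateUser(User user, String newAddress) {
        user.setAddress(newAddress);
        String event = CHANGE_ADDRESS_CONFIRMATION + ";" + user.getEmail();
        publisher.publish(EMAIL_TOPIC, user.getEmail(), event);
    }
}
```

In a quick test, a lambda such as `(topic, key, value) -> System.out.println(value)` can stand in for the publisher. Keying each record by email is also an assumption: with Kafka's default partitioner, records that share a key land on the same partition, so one user's events stay in order.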

The messageQueue class is where the magic happens. Using the Kafka dependencies and a few basic configuration settings, it calls KafkaProducer to send our event to the topic, where the consumer will read it.

For testing purposes, we simply create a class with a main method that executes our service code. In the real world, this could be, for example, a microservice endpoint that updates the user's address.
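A minimal sketch of a messageQueue-style class and the test main method, assuming the org.apache.kafka:kafka-clients library is on the classpath and a broker is listening on localhost:9092 (Kafka's default port). The class name MessageQueue, its methods, and the sample email are hypothetical; the payload follows the same assumed "<EVENT_TYPE>;<email>" format.

```java
import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.apache.kafka.common.serialization.StringSerializer;

// Hypothetical wrapper around a KafkaProducer with String keys and values.
class MessageQueue implements AutoCloseable {
    private final KafkaProducer<String, String> producer;

    MessageQueue(String bootstrapServers) {
        this.producer = new KafkaProducer<>(producerProperties(bootstrapServers));
    }

    // The basic settings a producer needs: where the broker is and how to serialize records.
    static Properties producerProperties(String bootstrapServers) {
        Properties props = new Properties();
        props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
        props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        return props;
    }

    // Blocks until the broker acknowledges the record, which keeps the demo easy to follow.
    RecordMetadata send(String topic, String key, String value) throws Exception {
        return producer.send(new ProducerRecord<>(topic, key, value)).get();
    }

    @Override
    public void close() {
        producer.close();
    }
}

class Producer {
    public static void main(String[] args) throws Exception {
        try (MessageQueue queue = new MessageQueue("localhost:9092")) {
            String email = "jane@example.com"; // hypothetical user
            RecordMetadata meta = queue.send("EMAIL_TOPIC", email,
                    "CHANGE_ADDRESS_CONFIRMATION;" + email);
            System.out.printf("Sent to %s, partition %d, offset %d%n",
                    meta.topic(), meta.partition(), meta.offset());
        }
    }
}
```

Calling get() on the Future returned by send makes each send synchronous, which suits a demo; a production service would more likely pass a callback to send and let the producer batch records.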

Coding Your Consumer

On the consumer side, which may also be a microservice, we have an events enum listing the event types we expect, as well as an EventInformation class that holds the information from each incoming event.
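The events enum and the EventInformation class might look like the sketch below. The constant CHANGE_ADDRESS_CONFIRMATION comes from the text; the enum name Events, the field names, and the parse method (which expects the assumed "<EVENT_TYPE>;<email>" payload) are hypothetical.

```java
// Event types the consumer knows how to handle (hypothetical enum name).
enum Events {
    CHANGE_ADDRESS_CONFIRMATION
}

// Holds the data carried by one incoming event.
class EventInformation {
    private final Events event;
    private final String email;

    EventInformation(Events event, String email) {
        this.event = event;
        this.email = email;
    }

    // Parses the assumed "<EVENT_TYPE>;<email>" payload.
    // Events.valueOf throws IllegalArgumentException for an unknown event type.
    static EventInformation parse(String payload) {
        String[] parts = payload.split(";", 2);
        if (parts.length != 2 || parts[1].isEmpty()) {
            throw new IllegalArgumentException("Malformed event: " + payload);
        }
        return new EventInformation(Events.valueOf(parts[0]), parts[1]);
    }

    Events getEvent() { return event; }

    String getEmail() { return email; }

    @Override
    public String toString() {
        return "EventInformation{event=" + event + ", email=" + email + "}";
    }
}
```

Failing fast on a malformed payload keeps bad events from silently triggering an email; a real consumer would log or route them elsewhere instead of crashing.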

To keep the project simple, I didn't add any fictional business rules. After we set up the Kafka parameters for our consumer, MessageQueueConsumer keeps waiting for incoming events and parses each one into an EventInformation instance that can be used to take some action, which in this case would be sending an address change confirmation email.

Also for testing purposes, we will just create a simple class with a main method that will execute our code.
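A sketch of a MessageQueueConsumer-style class and its test main method, again assuming kafka-clients on the classpath and a broker on localhost:9092. The group ID email-service and the method names are hypothetical. Setting auto.offset.reset to earliest is what makes the consumer start from the beginning of the topic when its group has no committed offset yet. To keep the block self-contained, it splits the assumed "<EVENT_TYPE>;<email>" payload inline rather than depending on an EventInformation class.

```java
import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

class MessageQueueConsumer {
    static Properties consumerProperties(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
        props.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        // With no committed offset for this group, start from the earliest record in the topic.
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        return props;
    }

    // Polls forever; a real email service would send the confirmation email instead of printing.
    static void listen(String bootstrapServers, String topic) {
        try (KafkaConsumer<String, String> consumer =
                     new KafkaConsumer<>(consumerProperties(bootstrapServers, "email-service"))) {
            consumer.subscribe(Collections.singletonList(topic));
            while (true) {
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(500));
                for (ConsumerRecord<String, String> record : records) {
                    String value = record.value();
                    if (value == null) {
                        continue; // skip records without a payload
                    }
                    String[] parts = value.split(";", 2); // assumed "<EVENT_TYPE>;<email>"
                    if (parts.length == 2) {
                        System.out.printf("offset=%d event=%s email=%s%n",
                                record.offset(), parts[0], parts[1]);
                    } else {
                        System.out.printf("offset=%d skipped malformed event: %s%n",
                                record.offset(), value);
                    }
                }
            }
        }
    }
}

class Consumer {
    public static void main(String[] args) {
        MessageQueueConsumer.listen("localhost:9092", "EMAIL_TOPIC");
    }
}
```

Because the consumer belongs to a group, Kafka remembers its committed offsets; after the first run, restarting it resumes where it left off instead of rereading the whole topic.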

Executing the Example

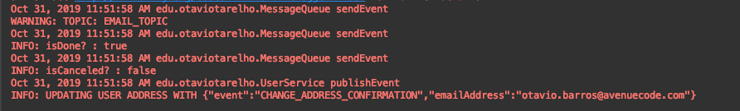

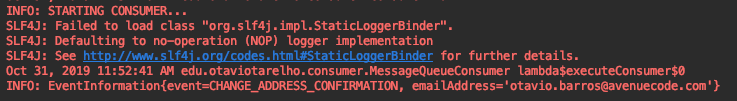

With Kafka and ZooKeeper up and running, it's time to execute our producer and consumer. Because nothing has been published to our topic yet, it stays empty until the producer runs. For that reason, the consumer is configured to read from the earliest offset, so it still receives events that were published before it started. When you execute Producer.main() and Consumer.main(), you will get the messages below.

Producer.main()

Consumer.main()

Conclusion

Hopefully, after reading parts one and two of this snippets series, you can visualize both the concept and the execution of EDA. Although this approach has downsides, such as harder flow control, higher DevOps demands, and complex debugging, EDA has been widely used in decoupled systems to help companies avoid the structural problems of monolithic systems.

Event-streaming frameworks such as Apache Kafka also reduce your workload when implementing EDA. Still, when choosing an architectural pattern for your project, make sure it meets your business requirements.

You can find the source code for this project here.

Author

Otavio Tarelho

Otavio is a Java Engineer at Avenue Code. He always searches for new things to learn, and not necessarily just about Java. He attributes his programming passion to the game The Sims, to which he was addicted when he was a kid.