There's a lot to consider when you're designing toolchains for applications' continuous integration and continuous deployment (CI/CD). Today, we'll describe what's involved in migrating an app that's on-premises into the AWS cloud.

Getting Started

WHAT DOES ON-PREMISES MEAN?

On-premises refers to infrastructure that the company owns and maintains itself. Software can live there happily, or not so happily when an outage or disruption in that infrastructure brings servers or applications down, or when hardware reaches its capacity limits because more users than expected access the systems at the same time. On the other hand, control over the infrastructure stays entirely in the organization's hands, which is a real advantage of keeping applications on-premises. What's best for a given company depends on its situation and needs, but that is a topic for a separate post.

WHICH TOOLS ARE USED FOR CI/CD?

According to Agile practitioners, continuous integration and continuous deployment are tied not to specific tools but to a mindset. In other words, these practices grew out of a well-defined process for developing stable software, even where that process calls for human intervention, such as manual approval before pushing changes to production. Applying CI/CD to an application therefore mandates neither specific tools nor hosting the app on-premises or in the cloud. This subject, too, deserves an article of its own.
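To make that point concrete without tying it to any tool, here is a minimal, tool-agnostic sketch in Python of a pipeline whose production stage waits for manual approval. The `Stage` class, the `run_pipeline` function, and the stage names are hypothetical and not part of any CI/CD product.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]       # returns True when the stage succeeds
    needs_approval: bool = False  # hypothetical flag: a person must sign off first


def run_pipeline(stages: List[Stage], approve: Callable[[str], bool]) -> List[str]:
    """Run stages in order and return the names of those that completed.

    The pipeline stops at the first failing stage, or at the first stage
    whose manual approval is refused.
    """
    completed: List[str] = []
    for stage in stages:
        if stage.needs_approval and not approve(stage.name):
            break  # approval refused: this stage and later ones never run
        if not stage.run():
            break  # a failing stage halts everything after it
        completed.append(stage.name)
    return completed
```

With an `approve` callback that always returns `False`, the build and test stages run but production is never reached; the same rule could be enforced by Jenkins, by an approval action in AWS CodePipeline, or by a person following a checklist.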

WHAT ARE THE BENEFITS OF MOVING TO THE CLOUD?

As with every decision in life, moving to the cloud has pros and cons, but a well-planned transition can save a company a lot of money in the mid-term. The company no longer has to spend money or effort keeping physical servers up to date, training support teams on fire drills late into the night, and so on.

AWS provides several tools to help migrate software to its infrastructure. For more customized installations, most tasks can be done through the AWS web console or IDE plugins, and practically everything can be done from the command line with the AWS CLI or programmatically with the AWS SDKs.

Real Use Case

The sample application used to describe the migration steps below is based on a real application that had a consistent CI/CD pipeline running entirely on-premises:

Once a developer committed changes to the Bitbucket Git repository, a Jenkins instance configured to "listen" to the repository kicked off the build process whenever there were fresh commits. If the build succeeded, a Chef recipe was invoked on the Linux-based server where the application was deployed and exposed to users through NGINX.
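The trigger logic of that on-premises flow can be sketched in a few lines. This is an illustration under assumptions, not Jenkins code: a CI server can be triggered by polling or by a webhook, and this sketch models one polling cycle; `poll_once`, `build`, and `deploy` are hypothetical names, with `deploy` standing in for invoking the Chef recipe.

```python
from typing import Callable, Optional, Tuple


def poll_once(
    head_commit: str,
    last_seen: Optional[str],
    build: Callable[[str], bool],
    deploy: Callable[[str], None],
) -> Tuple[Optional[str], bool]:
    """Run one polling cycle of a hypothetical CI job.

    Returns (commit now considered seen, whether a deployment happened).
    """
    if head_commit == last_seen:
        return last_seen, False   # no fresh commits: nothing to build
    if build(head_commit):
        deploy(head_commit)       # e.g. run the Chef recipe on the target server
        return head_commit, True
    return head_commit, False     # build failed: remember the commit, but do not deploy
```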

It's important to note that the database used by this real application was also on-premises. Before the application itself was moved to the cloud, however, the database was migrated, which proved a helpful exercise for starting to test AWS on the storage side. Setup adjustments were needed, and it would have been much worse if both the database and the application had been moved in one shot.

The Migration

As mentioned above, this real-world scenario involved a database, which was migrated to Amazon RDS with minimal effort: it was mostly a matter of placing the remote DB inside a VPC that only computers and systems on the company VPN could reach. Once the DB was migrated, the app was updated to point to the remote DB.

The first proof of concept moved the application to AWS Elastic Beanstalk, which was quite straightforward because it required very little configuration and AWS know-how. (Beanstalk was dropped for this particular app, but it was later used for other, more complex apps, since it supports multiple platforms.)

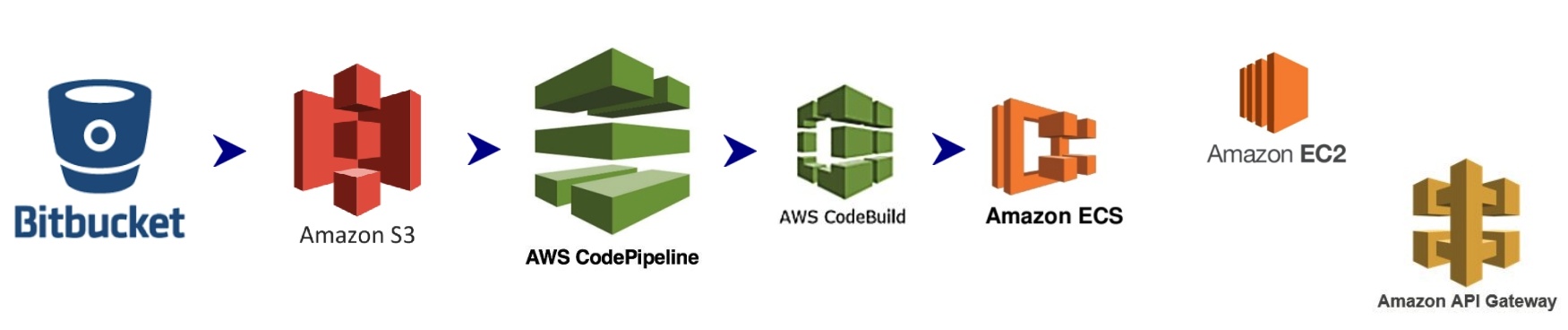

The second and final migration solution for this same application consisted of the following flow:

> Bitbucket - the Git repository was already part of the on-premises flow. To integrate it into the new AWS flow, we configured an access token generated in AWS IAM and added a webhook, pointing to an endpoint generated on the AWS side, that takes the source code from Bitbucket and places it in Amazon S3.

> Amazon S3 (Simple Storage Service) - We used S3 as the central store for the application's source code, where any AWS service in the pipeline can pick it up.
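The webhook's job is to put a snapshot of the repository into S3 where the pipeline can read it. A minimal sketch of the packaging step, assuming the object the S3 source action reads is a zip archive of the repository with `buildspec.yml` and the `Dockerfile` at its root (CodeBuild looks for `buildspec.yml` in the root of the source by default); `zip_source_tree` is a hypothetical helper, not part of any AWS tool:

```python
import io
import os
import zipfile


def zip_source_tree(root: str, exclude_dirs=(".git",)) -> bytes:
    """Return an in-memory zip of every file under `root`, skipping `exclude_dirs`.

    Archive paths are relative to `root`, so buildspec.yml and the Dockerfile
    sit at the top level of the zip.
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for dirpath, dirnames, filenames in os.walk(root):
            # Prune excluded directories in place so os.walk never descends into them.
            dirnames[:] = [d for d in dirnames if d not in exclude_dirs]
            for filename in filenames:
                full_path = os.path.join(dirpath, filename)
                archive.write(full_path, arcname=os.path.relpath(full_path, root))
    return buffer.getvalue()
```

The resulting bytes could then be uploaded to the key configured as `S3ObjectKey` in the pipeline, for example with boto3's `put_object(Bucket=..., Key=..., Body=...)`.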

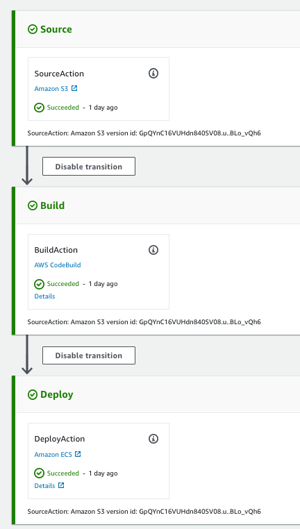

> AWS CodePipeline - This is the AWS CI/CD "plug-n-play" service; as the AWS documentation puts it, it "builds, tests, and deploys your code every time there is a code change, based on the release process models you define."

In the AWS web console, the pipeline for this case links three stages: source, build, and deploy.
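Each stage hands a named artifact to the next: in the CloudFormation template shown later, Source outputs `${AppName}-${Environment}-source`, Build consumes it and outputs `${AppName}-${Environment}-image`, and Deploy consumes that. A toy Python model of this hand-off (not the CodePipeline API; `run_stages` and the example values are hypothetical):

```python
from typing import Callable, Dict, List, Optional, Tuple

# (stage name, input artifact name, output artifact name, action)
StageSpec = Tuple[str, Optional[str], Optional[str], Callable[[object], object]]


def run_stages(stages: List[StageSpec]) -> Dict[str, object]:
    """Run stages in order, storing each output artifact under its name.

    A stage receives the artifact named as its input (or None when it has no
    input), and whatever it returns is stored under its output artifact name.
    """
    artifacts: Dict[str, object] = {}
    for _name, input_name, output_name, action in stages:
        payload = artifacts[input_name] if input_name else None
        result = action(payload)
        if output_name:
            artifacts[output_name] = result
    return artifacts
```

With `AppName` set to `simple-java-app` and `Environment` set to `dev` (example values), the artifacts would be named `simple-java-app-dev-source` and `simple-java-app-dev-image`.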

> AWS CodeBuild: As its name suggests, AWS CodeBuild is a service that builds applications, much as Jenkins does. (Jenkins is also an option for the CodePipeline build stage, but we chose CodeBuild because it only requires a buildspec.yml, committed along with the project source code, to build and create the application package.)

> Amazon ECS: According to its own webpage:

Amazon Elastic Container Service (Amazon ECS) is a highly scalable, high-performance container orchestration service that supports Docker containers and allows you to easily run and scale containerized applications on AWS. Amazon ECS eliminates the need for you to install and operate your own container orchestration software, manage and scale a cluster of virtual machines, or schedule containers on those virtual machines.

In other words, ECS is amazing: it lets us run many applications as services on a limited number of virtual machines that share their resources. In this use case, each service is configured to always keep at least one instance running, so if one crashes for some reason, the service starts another.
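The "always at least one instance" behavior comes from the service's desired count: the ECS scheduler compares it with the tasks actually running and starts replacements when they fall short. The `aws ecs stop-task` command in the buildspec below relies on the same mechanism. A toy Python model of that reconciliation (the `Service` class and task names are hypothetical, not the ECS API):

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Service:
    """Toy model of an ECS service that keeps `desired_count` tasks running."""

    name: str
    desired_count: int
    running: List[str] = field(default_factory=list)
    launched: int = 0  # how many tasks have ever been started, used for task IDs

    def task_stopped(self, task_id: str) -> None:
        """Simulate a task crashing or being stopped (e.g. by `aws ecs stop-task`)."""
        self.running.remove(task_id)

    def reconcile(self) -> List[str]:
        """Start tasks until the running count matches the desired count."""
        started: List[str] = []
        while len(self.running) < self.desired_count:
            self.launched += 1
            task_id = f"{self.name}-task-{self.launched}"
            self.running.append(task_id)
            started.append(task_id)
        return started
```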

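N.B. Relatively recently, AWS introduced Fargate as a launch type for ECS, and it simplifies life a lot: with the EC2 launch type you must provision and manage the EC2 instances that make up the cluster, whereas with Fargate AWS manages the underlying servers for you. Either way, the images still come from a container registry such as Amazon ECR.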

> Amazon EC2: This is the virtual machine where an instance of our application lives. It is the equivalent of the on-premises Linux-based server where the app used to live and, in our case, it is part of the ECS cluster described above.

> Amazon API Gateway: As its name suggests, this is where APIs are configured. In this use case, Amazon API Gateway served as a workaround to quickly expose the endpoints over HTTPS, because no load balancer (ELB) had been set up.

Sample Application Structure

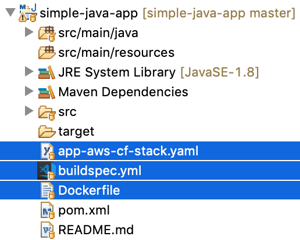

Switching focus to the application, here is what had to be added to its structure:

Dockerfile

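```dockerfile
# Build stage: copy the project in and let Maven download dependencies,
# compile the code, and package the executable Spring Boot jar
FROM maven:3.3-jdk-8 AS build
WORKDIR /usr/src/app
COPY . /usr/src/app
RUN mvn clean package

# Runtime stage: only the packaged jar goes into a slim Alpine-based JDK image
FROM openjdk:8-jdk-alpine
COPY --from=build /usr/src/app/target/example-0.0.1-SNAPSHOT.jar /opt/simple-java-app.jar

# JAVA_OPTS is passed in with --build-arg and persisted as an environment variable
ARG JAVA_OPTS
ENV JAVA_OPTS=${JAVA_OPTS}

# Shell form, so $JAVA_OPTS is expanded when the container starts
CMD java $JAVA_OPTS -jar /opt/simple-java-app.jar
```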

This Dockerfile makes the app portable not only to Amazon ECS but to any Docker-based container platform.

It uses a multi-stage build: the first stage builds the project with Maven, downloading all dependencies, compiling the code, and producing an executable Spring Boot jar; the second stage copies only that jar into a slim Alpine-based image and tells Docker how to run it.
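`JAVA_OPTS` travels from the CodeBuild environment (where the CloudFormation template below sets it to `-Dspring.profiles.active=${Environment}`) into the image via `--build-arg`, `ARG`, and `ENV`. Because `CMD` uses the shell form, `/bin/sh` expands the unquoted `$JAVA_OPTS` and splits it on whitespace when the container starts. The Python sketch below approximates the resulting argument list; `container_command` is a hypothetical helper, and it ignores shell features such as globbing.

```python
from typing import List


def container_command(java_opts: str, jar: str = "/opt/simple-java-app.jar") -> List[str]:
    """Approximate the argv that `/bin/sh -c "java $JAVA_OPTS -jar <jar>"` runs.

    The unquoted $JAVA_OPTS is split on whitespace, so each option becomes a
    separate JVM argument and an empty value contributes no argument at all.
    Quotes inside the value are not interpreted, which str.split() mirrors.
    """
    return ["java", *java_opts.split(), "-jar", jar]
```

Because the expansion happens at container start, the profile could also be overridden at run time with `docker run -e JAVA_OPTS=...` without rebuilding the image.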

buildspec.yml

```yaml
version: 0.2

phases:
  pre_build:
    commands:
      - echo Logging in to Amazon ECR...
      # AWS CLI v1 syntax; AWS CLI v2 removed get-login in favor of get-login-password
      - $(aws ecr get-login --no-include-email --region $AWS_DEFAULT_REGION)
  build:
    commands:
      - echo Build started on `date`
      - echo Building the Docker image...
      - docker build -t $IMAGE_REPO_NAME:$IMAGE_TAG --build-arg JAVA_OPTS="$JAVA_OPTS" .
  post_build:
    commands:
      - echo Build completed on `date`
      - echo Tagging Docker image...
      - docker tag $IMAGE_REPO_NAME:$IMAGE_TAG $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG
      - echo Pushing the Docker image...
      - docker push $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG
      # imagedefinitions.json tells the ECS deploy action which container gets which image
      - printf '[{"name":"simple-java-app","imageUri":"%s"}]' $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG > imagedefinitions.json
      # Stop the service's first running task; ECS starts a replacement to keep the desired count
      - aws ecs stop-task --cluster $CLUSTER_NAME --task $(aws ecs list-tasks --cluster $CLUSTER_NAME --service-name $IMAGE_REPO_NAME --output text --query 'taskArns[0]')

artifacts:
  files:
    - imagedefinitions.json
```

This file plays the role that the Chef recipe invoked by Jenkins played in the on-premises CI/CD. Unsurprisingly, it's the "recipe" AWS CodeBuild follows: it logs in to Amazon ECR, builds the Docker image from the Dockerfile, pushes it to ECR, and writes imagedefinitions.json, which the ECS deploy stage uses to roll the new image out to the service.
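The `printf` step produces the file the ECS deploy action reads: a JSON list mapping a container name in the task definition to the new image URI. A Python sketch of the same string-building, with made-up example values for the account ID, region, repository, and tag (the helper names are hypothetical):

```python
import json


def ecr_image_uri(account_id: str, region: str, repo: str, tag: str) -> str:
    """Build the same registry path the buildspec passes to docker tag/push."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo}:{tag}"


def image_definitions(container_name: str, image_uri: str) -> str:
    """Serialize imagedefinitions.json as the buildspec's printf does (no spaces)."""
    return json.dumps([{"name": container_name, "imageUri": image_uri}], separators=(",", ":"))
```

The `name` field has to match the container name in the ECS task definition (here `simple-java-app`); otherwise the deploy action has no container to update.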

CloudFormation Template

```yaml
AWSTemplateFormatVersion: '2010-09-09'

Parameters:
  ......

Resources:
  Pipeline:
    Type: 'AWS::CodePipeline::Pipeline'
    Properties:
      Name: !Sub '${AppName}-${Environment}-pipeline'
      RoleArn: !Sub '${PipelineRole}'
      ArtifactStore:
        Type: S3
        Location: !Sub '${PipelineLocation}'
      Stages:
        - Name: Source
          Actions:
            - Name: SourceAction
              ActionTypeId:
                Category: Source
                Owner: AWS
                Provider: S3
                Version: 1
              RunOrder: 1
              Configuration:
                PollForSourceChanges: true
                S3Bucket: !Sub '${S3Bucket}'
                S3ObjectKey: !Sub '${S3BucketKey}'
              OutputArtifacts:
                - Name: !Sub '${AppName}-${Environment}-source'
        - Name: Build
          Actions:
            - Name: BuildAction
              ActionTypeId:
                Category: Build
                Owner: AWS
                Provider: CodeBuild
                Version: 1
              RunOrder: 1
              Configuration:
                ProjectName: !Sub '${AppName}-${Environment}-build'
              InputArtifacts:
                - Name: !Sub '${AppName}-${Environment}-source'
              OutputArtifacts:
                - Name: !Sub '${AppName}-${Environment}-image'
        - Name: Deploy
          Actions:
            - Name: DeployAction
              ActionTypeId:
                Category: Deploy
                Owner: AWS
                Provider: ECS
                Version: 1
              RunOrder: 1
              Configuration:
                FileName: imagedefinitions.json
                ClusterName: !Ref ECSClusterName
                ServiceName: !Ref AppName
              InputArtifacts:
                - Name: !Sub '${AppName}-${Environment}-image'
    # DependsOn is a resource attribute, so it sits beside Type and Properties
    DependsOn:
      - CodeBuildProject

  CodeBuildProject:
    Type: 'AWS::CodeBuild::Project'
    Properties:
      Name: !Sub '${AppName}-${Environment}-build'
      ServiceRole: !Sub '${PipelineRole}'
      Source:
        Type: CODEPIPELINE
      Artifacts:
        Type: CODEPIPELINE
      Environment:
        Type: LINUX_CONTAINER
        ComputeType: BUILD_GENERAL1_SMALL
        Image: 'aws/codebuild/docker:17.09.0'
        # Lets the build container run the Docker daemon needed for docker build/push
        PrivilegedMode: true
        EnvironmentVariables:
          - Name: AWS_ACCOUNT_ID
            Value: !Sub '${AWSAccountID}'
          - Name: AWS_DEFAULT_REGION
            Value: !Sub '${AWSRegion}'
          - Name: IMAGE_REPO_NAME
            Value: !Sub '${AppName}'
          - Name: IMAGE_TAG
            Value: !Sub '${Environment}'
          - Name: CLUSTER_NAME
            Value: !Sub '${ECSClusterName}'
          - Name: JAVA_OPTS
            Value: !Sub '-Dspring.profiles.active=${Environment}'
      Tags:
        - Key: app-name
          Value: !Ref AppName
```

Finally, and most importantly, this is the template that generates the pipeline and all its resources with AWS CloudFormation. It is not used by the application's CI/CD itself but for creating and recreating the pipeline whenever needed. This is particularly useful because if any step fails while the stack is being created, CloudFormation rolls back the resources it created, and deleting the stack removes all of them, so unnecessary costs are avoided. It also means that if other projects with the same structure need to move to AWS, the template is ready to use with only a few changes.
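Reusing the template for another project mostly means supplying different parameter values, since names such as `${AppName}-${Environment}-pipeline` are resolved with `!Sub` (`Fn::Sub`). The sketch below mimics only plain `${Name}` parameter substitution; real `Fn::Sub` also handles `${Resource.Attribute}` references, pseudo parameters such as `${AWS::Region}`, and the `${!Literal}` escape, none of which are modeled here. `resolve_sub` and the parameter values are hypothetical.

```python
import re
from typing import Mapping

# Matches ${Name} where Name is a plain parameter name (letters, digits, underscore).
_PLACEHOLDER = re.compile(r"\$\{([A-Za-z0-9_]+)\}")


def resolve_sub(template: str, params: Mapping[str, str]) -> str:
    """Replace each ${Name} with params[Name], raising KeyError for unknown names.

    Placeholders that do not match the simple pattern (e.g. ${AWS::Region})
    are left untouched.
    """
    def replace(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name not in params:
            raise KeyError(f"unresolved reference: {name}")
        return params[name]

    return _PLACEHOLDER.sub(replace, template)
```

Changing only `AppName` and `Environment` yields a fresh, non-colliding set of resource names for the next project.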

Conclusion

Together, we have demystified the process of building and deploying a sample application to the AWS cloud using a real use case. Such an exercise requires spelling out multiple concepts that are sometimes taken for granted, but doing so is important for properly choosing the CI/CD toolchain, both for steps that are already defined and, especially, for steps that are not.

I neglected to mention earlier that an alternative migration option is to break the application into microservices with AWS Lambda. For a production monolith, this is quite challenging unless it is planned in advance and done incrementally.

Our next post will be written for serverless applications' CI/CD in AWS, so stay tuned!

Do you have migration use cases of your own? Please don't hesitate to share them with us! Our sample app is also available for download.