In 2019, I worked at an investment bank in the city of São Paulo. Every day, our systems processed transactions worth millions, and our motto was "stability and security."

One of the precautions we took was to protect against DDoS (Distributed Denial of Service) attacks. Cloud platforms advertise that such attacks will not take a system down, because their infrastructure is robust and can scale to handle a very large number of requests, but that scaling comes at a price. We did not want the bank to spend an absurd amount of money if it suffered such an attack.

The idea

We held a meeting to discuss ideas and possibilities, and the winning idea was to cache each user's session together with a request counter. Each user would be allowed a maximum of 50 requests per minute.

We did not choose these numbers arbitrarily. Instead, we monitored the sessions of several users to determine the average time of use of the client's application, the number of requests made by each client, and the time taken to make those requests. When a user logs in, a token is generated to identify them until they log out. This token can be used as a cache key, which does not change until logout, to determine how many requests this user has made within one minute.

Tools

Cache

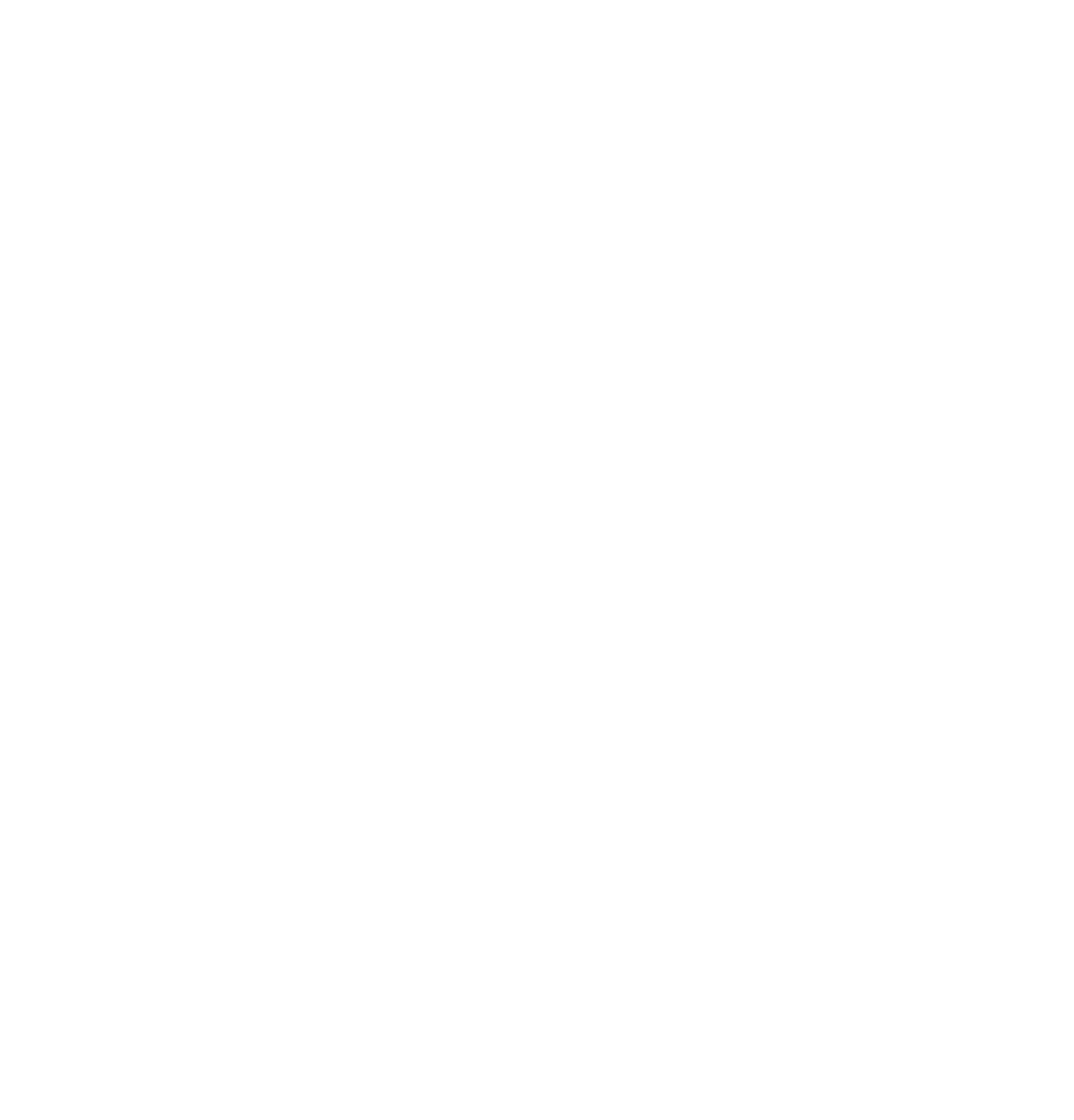

We chose Redis Cache for our caching needs because it makes it simple to set a Time to Live (TTL), also known as the expiration time, on each key. Once the expiration time is reached, the cached user session is deleted automatically. It is created again on the user's next request, which resets the counter.
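To make the expiration behavior concrete, here is a minimal in-memory sketch of a counter store whose keys disappear after a TTL. It is only an illustration, not the bank's code and not Redis itself: the class `TtlCounterStore`, its method names, and the injectable `clock` are all hypothetical. In Redis, the equivalent effect comes from setting the key with an expiration (for example `SET key value EX 60`, or `EXPIRE key 60` on an existing key), and `INCR` leaves the remaining TTL untouched.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TtlCounterStore:
    """In-memory stand-in for a cache whose keys expire (hypothetical helper)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        # key -> (counter value, absolute expiry time on the clock)
        self._entries: Dict[str, Tuple[int, float]] = {}

    def create(self, key: str, value: int, ttl_seconds: float) -> None:
        """Store a counter that disappears ttl_seconds from now."""
        self._entries[key] = (value, self._clock() + ttl_seconds)

    def get(self, key: str) -> Optional[int]:
        """Return the counter, or None if the key is missing or has expired."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: behave as if it was never stored
            return None
        return value

    def increment(self, key: str) -> int:
        """Add one to a live counter without extending its expiry."""
        current = self.get(key)
        if current is None:
            raise KeyError(key)
        _, expires_at = self._entries[key]
        self._entries[key] = (current + 1, expires_at)
        return current + 1
```

With a 60-second TTL, a counter created at time 0 is still readable at second 59 and is gone at second 60, so the next request starts a fresh counter.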

Both Azure and AWS allow you to configure the TTL in Redis, and documentation is available for both platforms.

Middleware

To analyze every request that goes through our APIs, we had to decide how to ship the validator: copy it into every API, or create a library and add it to every API. The connection configuration could live in a JSON file so that each environment gets its own values during the build pipeline. All of these ideas are valid, and each fits a different case. Whatever the packaging, the one thing that is certain is that the validator will be a middleware.

For those who are not familiar with the term, middleware is code that sits between two components. In our case, it sits between the incoming requests and the API.

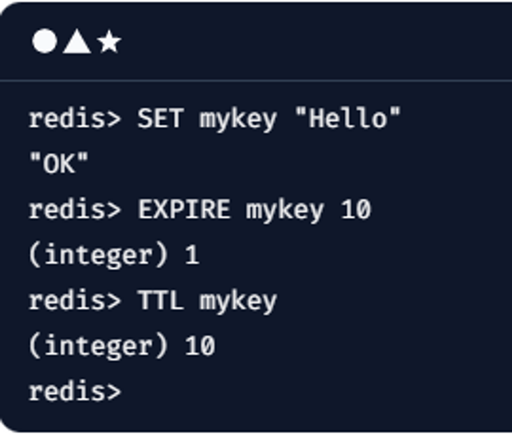

The role of the middleware will be to:

- Check whether the user already has a session. If not, create it with a counter set to an initial value and a TTL of 60 seconds.

- If the key / session already exists in the cache, retrieve the value associated with the key.

- Compare the value with the maximum limit allowed, which in our case is 50.

- Decide the fate of the request: reject it or let it continue.

- If the request proceeds, increment the counter by one.
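The steps above can be sketched as a single function. This is a minimal illustration under stated assumptions, not the middleware we shipped: the `CacheClient` interface (modeled on the `get`, `set(..., ex=...)`, and `incr` calls of a Redis client), the `session:` key prefix, the initial counter value of 0, and the names `check_request` and `Decision` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Protocol

MAX_REQUESTS = 50    # limit per window, from the article
WINDOW_SECONDS = 60  # TTL of the cached session, from the article


class CacheClient(Protocol):
    """Subset of a Redis-like client this sketch relies on (assumed interface)."""

    def get(self, name: str) -> Optional[object]: ...
    def set(self, name: str, value: int, ex: int) -> object: ...
    def incr(self, name: str) -> int: ...


@dataclass
class Decision:
    allowed: bool
    count: int  # requests counted in the window, including this one if allowed


def check_request(cache: CacheClient, token: str) -> Decision:
    key = f"session:{token}"  # hypothetical key format
    raw = cache.get(key)
    if raw is None:
        # No session yet: create the counter with the 60-second TTL.
        cache.set(key, 0, ex=WINDOW_SECONDS)  # initial value 0 is an assumption
        count = 0
    else:
        # Session exists: recover the stored counter (bytes, str, or int).
        count = int(raw)
    if count >= MAX_REQUESTS:
        # Limit reached: the request ends here.
        return Decision(allowed=False, count=count)
    # Request continues: add one to the counter.
    return Decision(allowed=True, count=cache.incr(key))
```

With these values, the first 50 requests in a window are allowed and the 51st is rejected until the key expires. Note that reading and then incrementing is not atomic, so a production version would likely need to handle concurrent requests for the same token.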

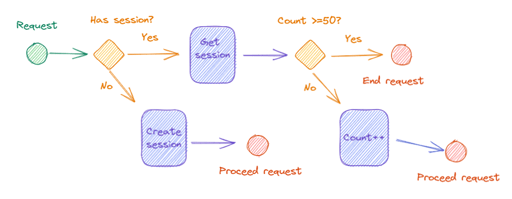

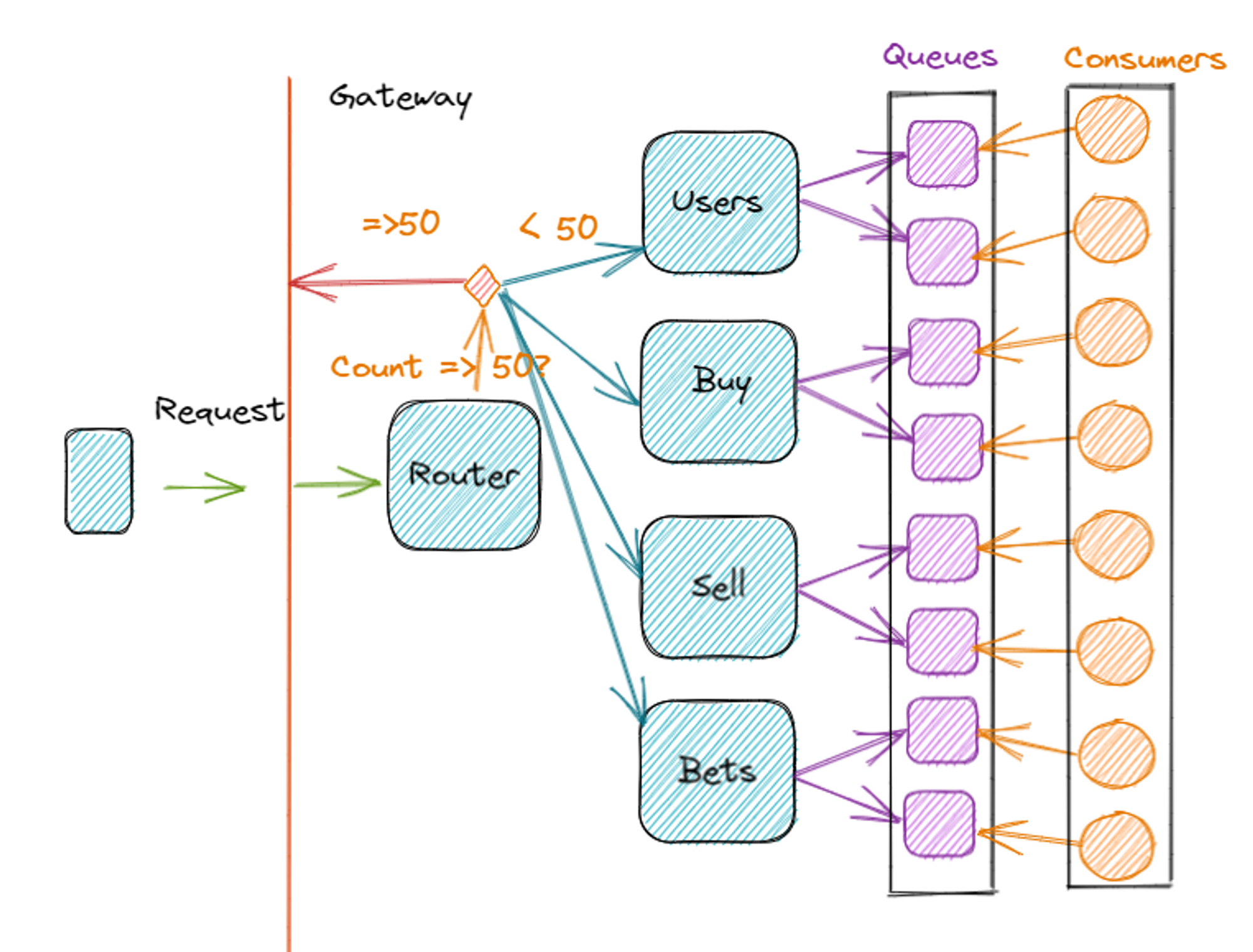

Router API

This API functions as a router, deciding which other APIs are involved in resolving each received request. It acts as a gatekeeper and is part of the gateway: all requests hit it first, which leaves the APIs that actually have access to the system's data in an inner layer. The middleware is installed on this Router API.
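As a rough illustration of this layering, the sketch below runs a guard before any routing happens, so a rejected request never reaches an inner API. Everything here is hypothetical: the `RouterApi` class, the prefix-based route table, and the choice of HTTP status 429 (Too Many Requests) for rejected requests are assumptions made for the example, not details of the bank's system.

```python
from typing import Callable, Dict, Tuple

Handler = Callable[[str], str]  # takes the request path, returns a response body
Guard = Callable[[str], bool]   # takes the session token, returns True to continue


class RouterApi:
    """Toy router: the guard (the middleware) runs before any inner API."""

    def __init__(self, guard: Guard) -> None:
        self._guard = guard
        self._routes: Dict[str, Handler] = {}

    def register(self, prefix: str, handler: Handler) -> None:
        """Map a path prefix to the inner API that resolves it."""
        self._routes[prefix] = handler

    def handle(self, token: str, path: str) -> Tuple[int, str]:
        # Gatekeeper step: rejected requests never touch the inner layer.
        if not self._guard(token):
            return 429, "Too Many Requests"  # status code is an assumption
        for prefix, handler in self._routes.items():
            if path.startswith(prefix):
                return 200, handler(path)
        return 404, "Not Found"
```

The guard would typically be the rate-limit check, for example a function that looks up the user's counter in the cache and returns whether the request may continue.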

The Router API's functionality depends heavily on the architectural decision in place, whether it is event-driven, BFF, or even monolithic. If a Router API cannot be implemented, it may be worth reconsidering the maximum number of requests per unit of time. If your frontend makes multiple requests to multiple APIs to build a single screen, and the middleware must be installed on every API, the number of requests from each user will always be high.

The End

We increased the bank's security while incurring only a minimal increase in cloud costs and API response times. As a result, the bank's customers can now enjoy an even more stable and secure service.

Advantages

- With these tools, every request is validated against the cache in the Router API, which shields all internal APIs, databases, queues, and consumers from excess traffic.

- In the event of an attack, it is possible to determine which users' logins, passwords, and tokens are being used. This makes it possible to handle each client's case separately and alert them if they may have been hacked.

- In an auto-scaling scenario, the only resource that will need to scale up is the router API, rather than the entire system.

Drawbacks

- Querying the cache on every request may slightly increase the system's response time.

- The cost associated with the cloud may increase slightly.

References

Middleware. Available at <https://learn.microsoft.com/en-us/aspnet/core/fundamentals/middleware/?view=aspnetcore-7.0>. Accessed on February 14, 2023.

Redis. Available at <https://redis.io/commands/expire/>. Accessed on February 14, 2023.

Azure. Available at <https://learn.microsoft.com/en-us/azure/azure-cache-for-redis/cache-best-practices-memory-management>. Accessed on February 14, 2023.

AWS. Available at <https://docs.aws.amazon.com/AmazonElastiCache/latest/red-ug/Strategies.html>. Accessed on February 14, 2023.

Backend for Frontend. Available at <https://medium.com/mobilepeople/backend-for-frontend-pattern-why-you-need-to-know-it-46f94ce420b0>. Accessed on February 14, 2023.

Event-Driven Architecture. Available at <https://learn.microsoft.com/en-us/azure/architecture/guide/architecture-styles/event-driven>. Accessed on February 14, 2023.

Author

Cristiano Guerra

Software Developer at Avenue Code. Cristiano is motivated by development and by creating new things that add value or provide good experiences for other people.